Big Tech wants AI to be regulated. Why do they oppose a California AI bill?

Source: Reuters

Advanced by State Senator Scott Wiener, a Democrat, the proposal would mandate safety testing for many of the most advanced AI models: those that cost more than $100 million to develop or that require a defined amount of computing power. Developers of AI software operating in the state would also need to outline methods for turning off their AI models if they go awry, effectively a kill switch.

The bill would also give the state attorney general the power to sue developers that are not compliant, particularly in the event of an ongoing threat, such as an AI taking over government systems like the power grid.

In addition, the bill would require developers to hire third-party auditors to assess their safety practices, and it would provide additional protections to whistleblowers who speak out against AI abuses.

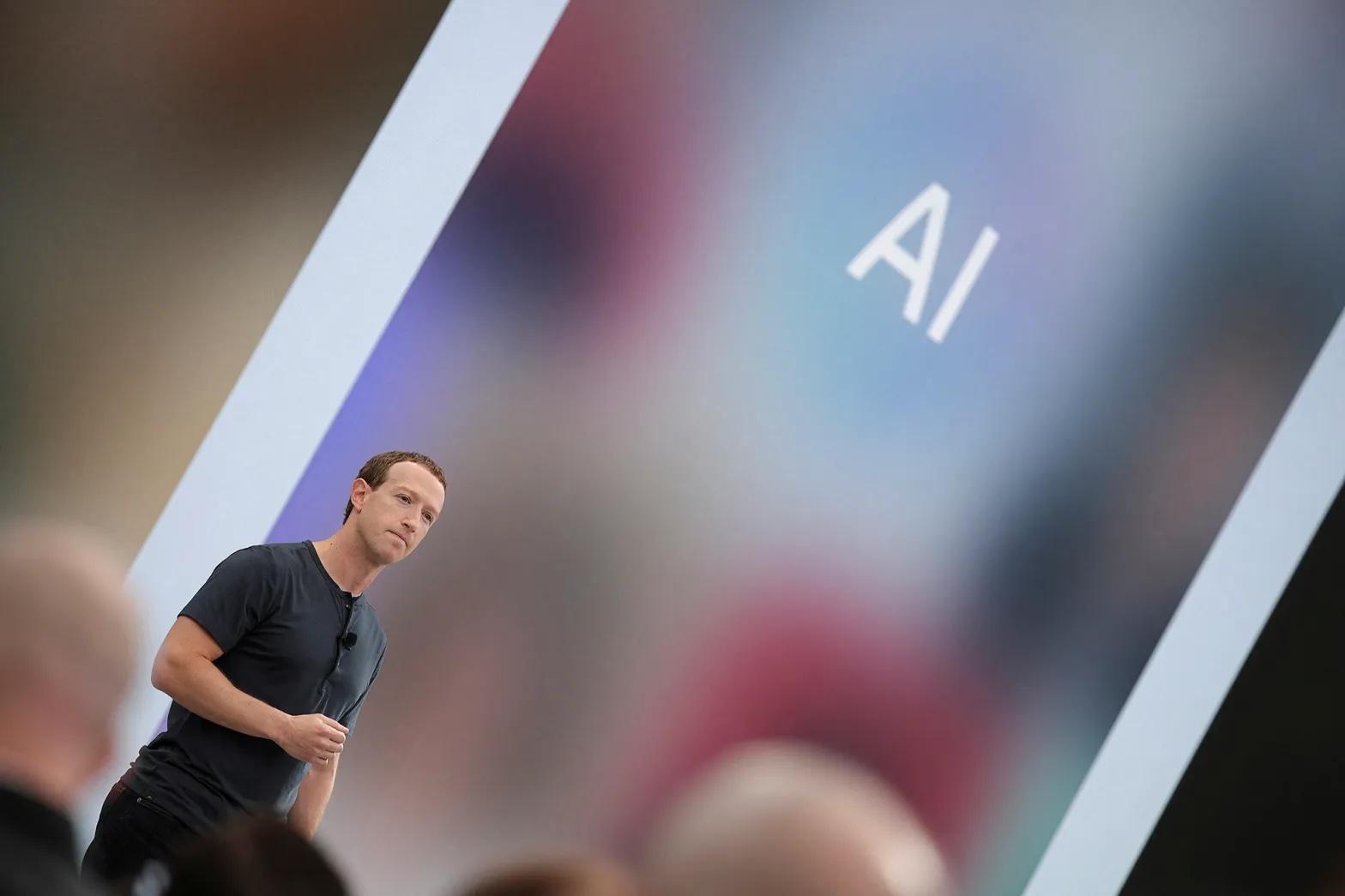

Alphabet's Google and Meta have expressed concerns in letters to Wiener. Meta said the bill threatens to make the state unfavorable to AI development and deployment. In a July post on X, Yann LeCun, chief scientist at the Facebook parent, called the bill potentially harmful to research efforts.

Of particular concern is the potential for the bill to apply to open-source AI models. Many technologists believe open-source models are important for creating less risky AI applications more quickly, but Meta and others have fretted that they could be held responsible for policing open-source models if the bill passes. Wiener has said he supports open-source models, and one of the recent amendments to the bill raised the threshold at which open-source models are covered by its provisions.
